In a time of uncertain budgets, redesign, and shifting leadership, the foreign assistance and evaluation communities have some positive developments to embrace. The new Office of Management and Budget (OMB) guidelines on monitoring and evaluation (M&E) of foreign assistance show that the Administration clearly understands and supports the value of M&E. The leading foreign assistance agencies are learning from their evaluation experience and are committed to building a culture of evaluation within their organizations.

There have been few opportunities for the public to hear directly from representatives of the Administration and the agencies about their foreign assistance policy priorities. This makes last month's event, organized by the Modernizing Foreign Assistance Network (MFAN) and The Lugar Center, a significant one. The event highlighted MFAN and The Lugar Center's recent report on foreign assistance evaluation, and US agency representatives shared the progress their agencies have made since the report's publication.

Robert Blair, Associate Director of National Security Programs, OMB, delivered keynote remarks. Kerry Bruce, Executive Vice President for Programs at Social Impact, moderated the panel, which included:

• Patricia Rader, US Agency for International Development (USAID)

• Thomas Kelly, Millennium Challenge Corporation (MCC)

• Gordon Weynand, US Department of State, F Bureau

Three key takeaways from the discussion stood out: 1) Real learning from evaluations is taking place. 2) There is a commitment to do more in the future. 3) The agencies have valuable advice for other agencies at the early stages of developing their evaluation policies.

The learning has already begun.

- From the start, MCC was designed to use evidence and data in its work. But it soon learned that, in some cases, what it set out to measure did not work out the way it intended. MCC remains committed to transparency and evaluation but has adjusted how it evaluates its programs to better understand their impact.

- USAID has had robust and dynamic evaluation policies for many years. But the agency has learned that having an evaluation policy is not enough to ensure a culture of evaluation. It is also necessary to have an explicit requirement to pause and learn from evaluations and the data they contain. USAID's collaborating, learning, and adapting (CLA) framework builds this reflection into its program cycle.

- The State Department learned that, while some of its assistance work resembles USAID's, it requires an evaluation policy more tailored to its development and diplomacy efforts. The department has made strides in developing a culture of evaluation across the organization.

The agencies promised more progress on evaluation in the future.

- MCC is committed to increasing accountability and learning by creating evaluation summaries that are easier to find and digest, along with more comprehensive analysis of its work.

- USAID is focused on learning from evaluations through CLA. It is collecting and disseminating a growing body of best practices in learning and adaptive management through the USAID Learning Lab.

- The State Department is working to improve dissemination of its evaluations, including by posting more of them online.

The agencies offered advice to other foreign assistance agencies and implementers beginning to develop M&E policies.

- MCC emphasized the importance of setting expectations and creating external demand for transparency and accountability at the outset. Consulting with external stakeholders and civil society in the early stages of developing an evaluation policy helps build a stronger policy and generate that demand.

- USAID recommended integrating M&E from the start. Rather than treating evaluation as something applied to programs after the fact, agencies should make it part of strategy, program design, and implementation. USAID also advised being explicit about adaptive management, and training and supporting staff to understand and expand M&E.

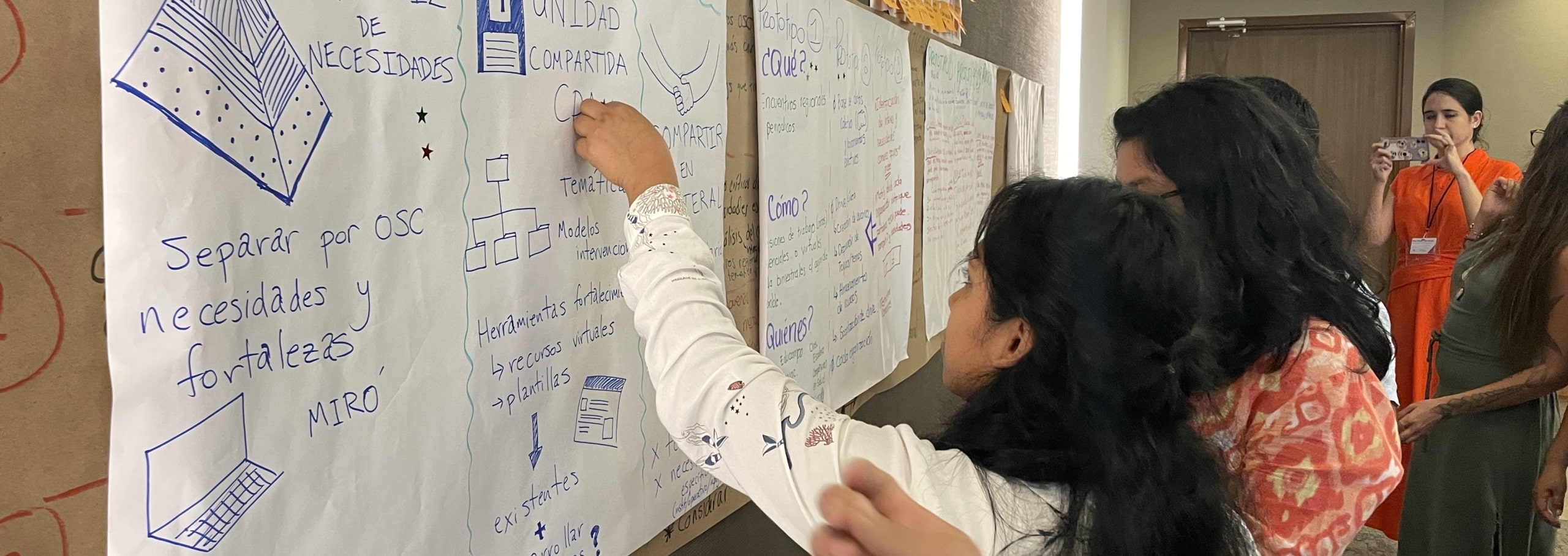

- The State Department advised creating a community of practice to foster learning and build a culture of evaluation.