If you ask three evaluators what quality in an evaluation is, you may very well get three different answers. One might say it is an evaluation that follows the American Evaluation Association guidelines or meets the criteria set out by the OECD-DAC. Another might say it is an evaluation that answers the evaluation questions with methodological rigor. A third might say that a quality evaluation is easy to read and understand and gives clear advice on what needs to be sustained or changed for a program to improve.

A recent GAO report on foreign assistance evaluations highlights the need for quality in evaluations. In addition to identifying the need for sufficient and reliable evidence, the report emphasizes the importance of evaluations providing information useful for decision-making: “A high quality evaluation helps agencies and stakeholders identify successful programs to expand or pitfalls to avoid.”

Why does quality matter?

No one sets out to do poor-quality work, but …

• Many evaluations are not useful for learning, accountability and program improvement.

• Evaluation users are often not committed to the evaluation process and its findings.

• Evaluation is seen as a bureaucratic requirement rather than a valuable learning tool.

• Evaluation teams often produce weak or uneven work.

• Few teams can marry the process and content dimensions of evaluation.

• Opportunities to achieve greater development effectiveness and social impact may be missed.

What if there were one system that focused on both the quality of the process and product and the use of the final results? What would that system look like, and how would it lead to quality?

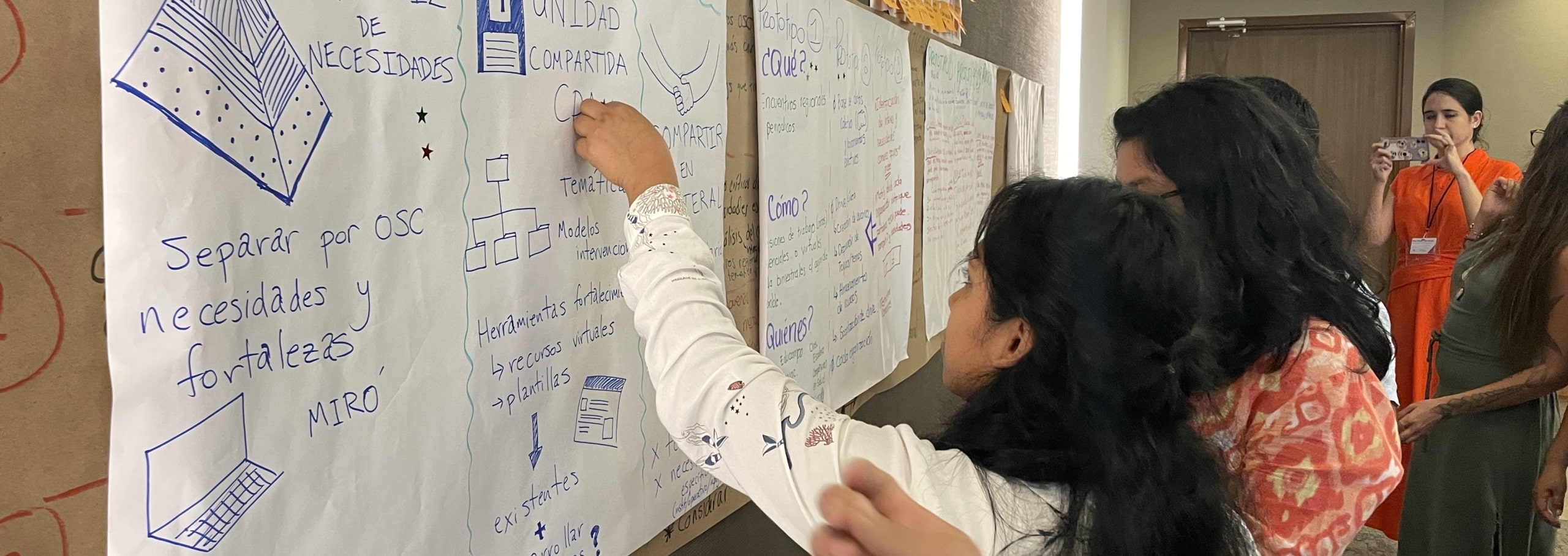

We developed a system for our evaluations at SI called EQUI (Evaluation Quality Use and Impact). The system starts by training every team leader and team member in the basic tenets of quality in evaluation through an online platform. Next, it provides a series of checklists that spell out what we believe defines quality across five key parts of the process. Then we set utilization-focused milestones that begin well before the final report and keep the client and the team focused on use from the outset. Finally, an online dashboard lets us track all our evaluations, see where each one is in the process, and flag possible delays or problems.

For example, on a recent evaluation, we noticed on the dashboard that the team leader had not submitted a draft findings, conclusions, and recommendations matrix before the outbrief with the client. This signaled that the analysis was off track and allowed our technical advisors to step in and provide assistance, helping to ensure a quality product.

In another evaluation, the technical advisor noticed some negative findings in the preliminary analysis and worked with the team leader to anticipate the client's reactions and craft actionable recommendations addressing those findings.

Another aspect of our EQUI system is our focus on evaluation use. Four to six months after each evaluation, we reach out to clients to find out how the evaluation has been used, in both intended and unintended ways. For one evaluation completed in 2015, we recently heard from the client that the parliament was using our report to draft policy in the health sector. While this was not the use we originally intended, we were pleased to see our work put to such good use!

Our EQUI system is far from perfect (we are developing EQUI 2.0 as we speak), but there are three things we love about this approach. First, it helps us identify quality problems early. Second, it helps us focus intentionally on evaluation use from the beginning. Third, it sets clear expectations for everyone on the team about the process and what quality means.