Developmental evaluation (DE) can be perceived as expensive within the donor and evaluation communities.[1] In fact, a study of all DEs conducted by USAID between 2010 and 2020 found that cost was one of three frequently cited reasons funders gave for deciding against commissioning a DE. However, examining what stakeholders believe DE’s value to be, rather than focusing on its cost alone, reframes the approach as often cost-effective, worthwhile, and, for some programs, indispensable.

A review of the evidence on USAID-funded DE cost and cost-effectiveness reveals that:

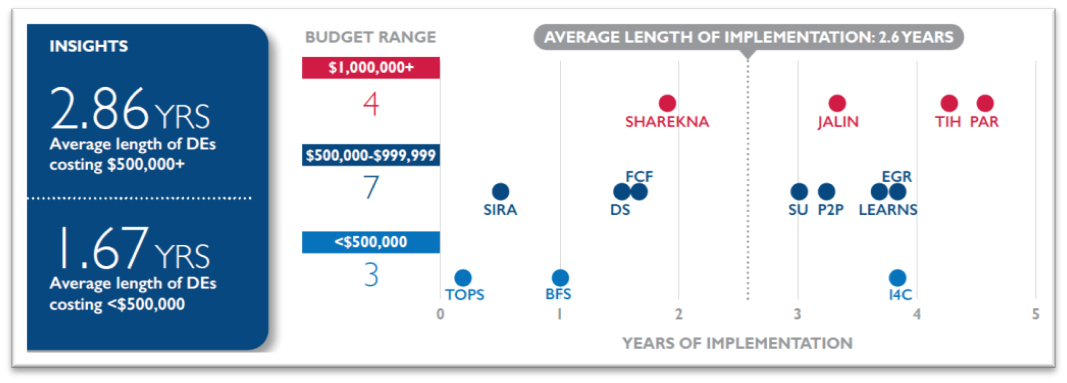

- Rather than being uniformly “expensive,” DE budgets vary significantly. Of the 14 DEs conducted by USAID between 2010 and 2020,[2] four cost over $1 million, seven cost between $500,000 and $1 million, and three cost less than $500,000. Budgets depended on duration, staffing, activities, and locations, with duration being a significant driver of DE cost (see graphic below).

- DE implementers are optimizing funding and duration by adapting team size and composition. DEs with budgets of roughly $500,000 to $750,000 ranged in duration from less than a year to over three years and used teams of three to ten evaluators and support staff. One of the lowest-cost evaluations used a part-time evaluator for three years.

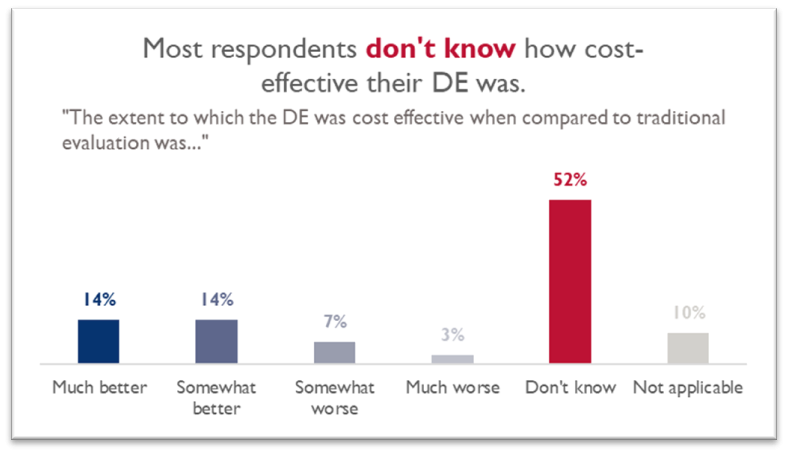

- Determining cost-effectiveness is difficult for evaluations in general, including DE. A survey of stakeholders from three DEs conducted by USAID’s DE Pilot Activity (DEPA-MERL) found that 52% of respondents did not know how cost-effective their DE was (see graph below). This result is understandable, as typically few individuals have access to all the data needed for a cost analysis. Among those who did offer an opinion, more than twice as many rated DE as much or somewhat better than traditional evaluation in terms of cost-effectiveness (28%) as rated it somewhat or much worse (10%).

- Additional investments of time and funding in ongoing DEs indicate that USAID sees value in the approach. Of the 14 DEs conducted by USAID between 2010 and 2020, at least half (seven) received extensions, and four of these also received additional funding. The actual number may be higher than seven, as only nine of the 14 DEs provided information in response to this particular inquiry.

- Notable examples exist of DE’s impact outweighing its cost. For example, as a result of the DE for Family Care First (FCF) in Cambodia, USAID restructured its awards to the program’s backbone organization and integrating partner, changing the implementing partners’ roles and responsibilities. In a USAID DEPA webinar, a panelist familiar with the program described this outcome: “Ultimately, the DE, even with the cost of the evaluation itself, ended up saving us substantial resources. Because of the findings from the DE, we ended up pivoting in a significant way… coalescing all of the work with one of the two partners.” In another example, the DE for USAID Jalin won USAID’s 2021 CLA Case Competition for advising on potentially lifesaving innovations in maternal and newborn health.

- When selecting the most suitable type of evaluation for a program, it is best to weigh the different purposes each type serves rather than cost alone. Impact evaluations, which can also be perceived as expensive, measure changes in outcomes attributable to a program, while formative and summative evaluations make judgments about a program’s efficacy. DE, by contrast, supports innovation, avoids judgments, and goes beyond delivering a report to help managers use its recommendations to adapt and course-correct. Ignoring these distinct purposes and choosing based solely on cost risks evaluating a program in a way that may be detrimental rather than useful.

Reframing the conversation about cost around DE’s value brings in critical factors such as its impact, return on investment, and unique purpose. It also helps correct misconceptions about a relatively new form of evaluation, which USAID added to its Operational Policy for the Program Cycle (ADS 201) in October 2019 as a type of performance evaluation. Ultimately, it enables funders and practitioners to make better-informed decisions about which type of evaluation fits their program’s needs.

[1] For background on the DE approach in general, please see the resources available on USAID’s Developmental Evaluation Pilot Activity (DEPA-MERL) website: https://www.usaid.gov/PPL/MERLIN/DEPA-MERL

[2] These DEs include the performance mid-term evaluation of the Selective Integrated Reading Activity (SIRA), the DE for the Sharekna Project to Empower Youth and Support Local Communities, the DE on the Alliances for Reconciliation Activity (PAR), DE to Strengthen USAID Integrated Health Service Delivery in Tanzania (TIH), Evaluative Learning Review Synthesis Report: USAID/CMM’s People-to-People Reconciliation Fund (P2P), DE for USAID LEARNS contract, Technical and Operations Performance Support (TOPS) Mid-Term Evaluation, DE for Early Grade Reading (EGR), DE for Innovation for Change (I4C), Bureau for Food Security DE pilot (BFS), Family Care First DE Pilot (FCF), USAID Sustained Uptake DE (SU), USAID/Center for Digital Development DE (DS), and the DE for USAID Jalin in Indonesia (Jalin).

Photo: Pixabay, Pexels